Machine learning and APIs have something in common. They are both technologies that have been in use for many years but have undergone an evolution recently that increased their adaptability.

Initially, machine learning (ML) relied on rule-based reasoning to build expert systems. APIs, meanwhile, served as interfaces that separated the software modules within an enterprise system or a single application.

Machine learning has since evolved to use neural networks for prediction and pattern recognition, which has expanded the role artificial intelligence plays in transforming businesses.

APIs also evolved: they adopted the REST architectural style and now exchange data in formats such as JSON and XML, making it easy for developers to reuse the services behind them.

Beyond that shared history, the two technologies seem to have little in common. So how are they able to work together? How does an API gateway for a machine learning platform work?

Before we head into all of that, let’s get our basics right:

What is an API (Application Programming Interface)?

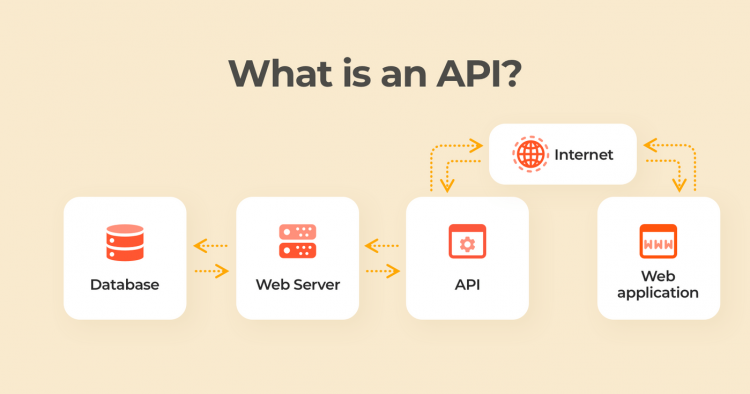

An API is a computing interface that allows applications to communicate and share information. APIs dictate the calls that are made, how they are made, the conventions used, and the data formats used when making the calls and sharing the data.

Developers can access the services behind the interface in a documented, stable, and defined way.

What is an API Gateway?

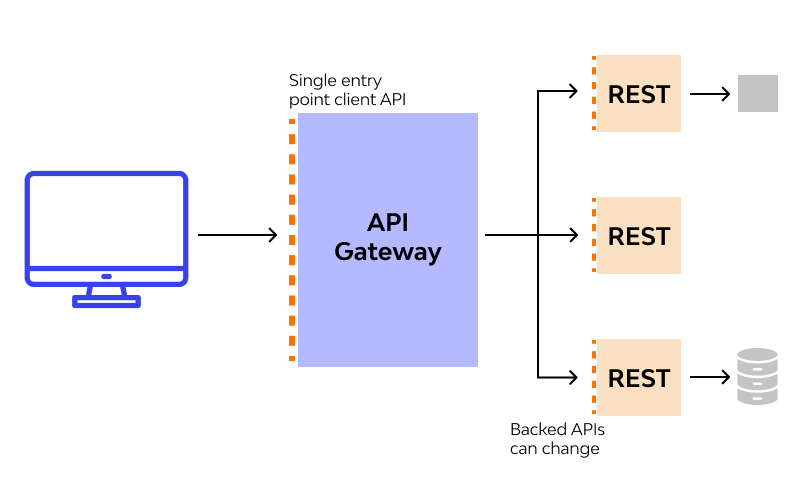

An API gateway is middleware that sits between an API's public endpoint and its backend services. It forwards each client request to the appropriate application service. It can also be described as an architectural pattern that was originally created to support microservices.

An API gateway plays a crucial role in making sure that machine learning platforms, as well as other software platforms, meet all their requirements with regard to the implementation of APIs.

To see why, consider how applications were built a few years ago. Developers used a monolithic approach in which all software components were interconnected, so a client only had to make a single call to retrieve data.

A load balancer, typically a standalone hardware device, routed all client requests to the relevant services. Once a service responded, the load balancer routed the response back to the right client.

Monolithic applications were hard to update and hard to extend with new and emerging technologies. This is the main reason why the monolithic approach has been replaced by the microservices architectural pattern.

What is an API Management Platform?

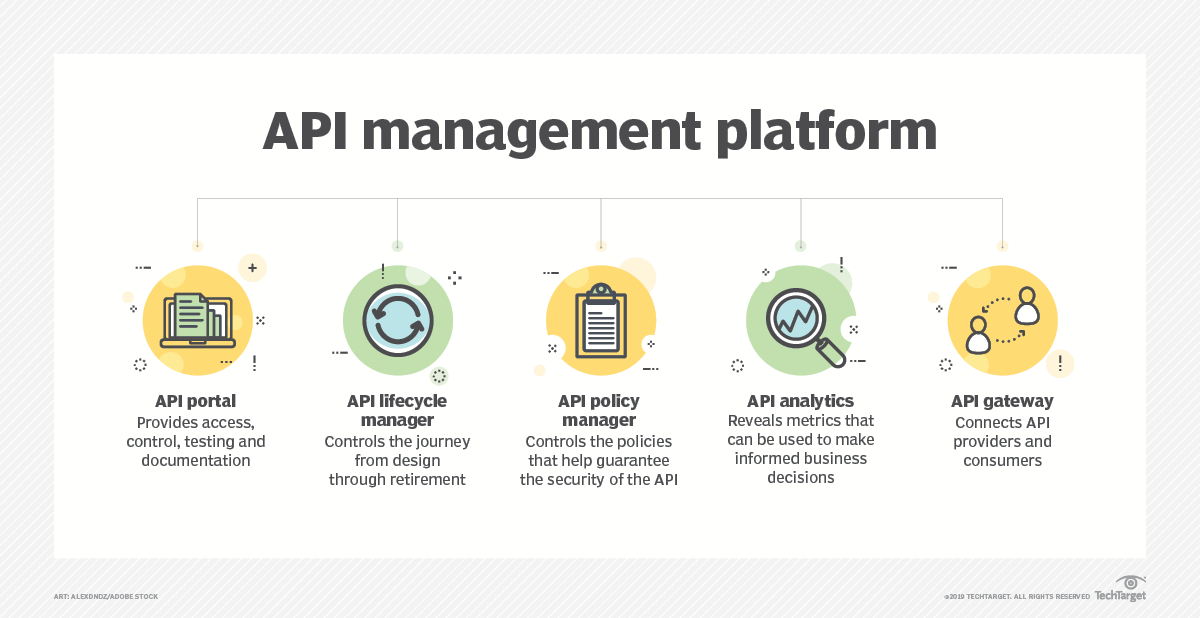

An API management platform is a tool used to access, control, distribute, and analyze APIs used by developers in a controlled environment. API management platforms benefit organizations by unifying control over their API integrations while ensuring they meet high performance and security standards.

The Role of an API Gateway in a Machine Learning Platform

The microservices architectural pattern discussed above is responsible for making sure that all the services in machine learning applications are physically separated. This makes them autonomous and independent.

Each microservice comes with its own public endpoint, unlike in the monolithic approach above, meaning that a client has to send each request directly to the microservice it needs.

This works well for small machine learning platforms. However, when enterprise-level machine learning platforms adopt microservices, several problems appear.

They include:

Each microservice in your machine learning platform has to be accessed through a public endpoint. This exposes the entire system to different types of cyber attacks. Normally, you should make sure that each service enforces authorization, but how long would this take for a large platform?

If a client wants to perform something as simple as logging into your ML platform, it has to make multiple calls to different microservices. This leads to a high number of round trips and, as a result, long waiting times.

With direct communication, all client applications are coupled with internal microservices. Retiring or even updating the latter affects the client applications.

This is where an API gateway comes in for a machine learning platform. By introducing an intermediary layer between clients and APIs, you can eliminate tight coupling, enforce security measures in one place, and balance load effectively.

The role of the API gateway is purely technical. In a machine learning platform, an API gateway is tasked with the following technical functions:

1. Routing Requests

If you travel out of town and book a hotel, there are different services that you can book from your hotel room. For instance, let us assume that you want to use the gym, make a reservation at the spa, and order breakfast.

You do not have to move to the gym, spa, and kitchen to make reservations and orders. You can simply call the receptionist from your room and tell them what you want. The receptionist will then let the appropriate personnel know about your requirements.

This is done for the convenience of the hotel staff as well as yourself as a customer. An API gateway works in a similar manner. This approach is also known as a reverse proxy. Its opposite, a forward proxy, sits in front of clients and fetches data from the public internet on their behalf, hiding the identity of the clients from the servers they reach.

The API gateway, on the other hand, retrieves the required internal data while hiding the origin of the server from the client. The gateway provides a single endpoint for machine learning platforms to use. It also makes sure that all requests are redirected to the appropriate internal services.
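The routing step described above can be sketched as a lookup from public path prefixes to internal service addresses. This is only a minimal illustration: the prefixes, hostnames, and the `resolve_upstream` helper are hypothetical, and a real gateway would also forward the request and return the response.

```python
# Hypothetical routing table: public path prefix -> internal service address.
# The prefixes and hostnames are illustrative assumptions.
ROUTES = {
    "/predict": "http://inference.internal:8001",
    "/train": "http://training.internal:8002",
    "/features": "http://feature-store.internal:8003",
}


def resolve_upstream(path: str) -> str:
    """Return the internal URL a public request path should be forwarded to."""
    # Try the longest prefix first so a more specific route wins over a shorter one.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        if path == prefix or path.startswith(prefix + "/"):
            return ROUTES[prefix] + path[len(prefix):]
    raise LookupError(f"no route for {path}")
```

Clients only ever see the gateway's address. The internal hostnames can change without any client noticing, as long as the table is updated.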

This way, you will have a single endpoint exposed to the public. The gateway reduces the number of calls by aggregating different responses and sending them at once. This reduces the waiting time discussed above.
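As a rough sketch of response aggregation, the gateway can call several internal services in parallel and return one merged payload, so the client makes a single round trip. The three `fetch_*` functions are hypothetical stand-ins for real network calls, and the field names are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


# Stand-ins for calls to internal microservices. In a real gateway these would
# be network requests; the service names and fields are hypothetical.
def fetch_profile(user_id: str) -> dict:
    return {"user_id": user_id, "plan": "pro"}


def fetch_models(user_id: str) -> list:
    return [{"name": "churn-predictor", "status": "deployed"}]


def fetch_quota(user_id: str) -> dict:
    return {"predictions_left": 950}


def dashboard(user_id: str) -> dict:
    """Fan out to three services in parallel and merge the results into one response."""
    backends = {"profile": fetch_profile, "models": fetch_models, "quota": fetch_quota}
    with ThreadPoolExecutor(max_workers=len(backends)) as pool:
        futures = {key: pool.submit(fn, user_id) for key, fn in backends.items()}
        return {key: future.result() for key, future in futures.items()}
```

Without the gateway, the client would have to issue the three calls itself and wait for each one over the public network.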

2. Working as a Facade

As a facade, an API gateway puts a single interface in front of a machine learning platform. This keeps clients loosely coupled to the backend and makes the platform easier to use.

With such a facade, you can easily change and maintain your backend component’s location without affecting your clients.

However, you need to be careful when using an API gateway as a facade for your machine learning platform. A single entry point can become a bottleneck and, as more logic accumulates in it, start to resemble the monolith you were trying to move away from.

When this happens, you can implement a pattern known as backend for frontend (BFF). This pattern allows your machine learning platform to use different API gateways based on client applications or business tasks.
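One way to picture the BFF pattern is as a separate, small gateway per client type, each shaping the same backend data for its own client. The sketch below is an assumption-laden illustration: the record fields, the two client types, and the function names are all hypothetical.

```python
# Full record as an internal model-registry service might return it.
# All field names and values are hypothetical.
MODEL_RECORD = {
    "name": "churn-predictor",
    "status": "deployed",
    "accuracy": 0.91,
    "training_log_url": "http://training.internal/logs/17",
}


def web_bff(record: dict) -> dict:
    """Gateway for the web dashboard: exposes the detailed view."""
    return dict(record)


def mobile_bff(record: dict) -> dict:
    """Gateway for the mobile app: returns only what a small screen needs."""
    return {"name": record["name"], "status": record["status"]}


# One gateway per client type instead of a single shared entry point.
BFF_BY_CLIENT = {"web": web_bff, "mobile": mobile_bff}


def handle(client_type: str, record: dict) -> dict:
    """Dispatch the request to the gateway that belongs to this client type."""
    return BFF_BY_CLIENT[client_type](record)
```

Because each gateway serves one kind of client, it can be scaled and changed independently, which eases the bottleneck risk of a single shared facade.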

3. Translating Protocols

Most modern APIs follow the REST architectural style when responding to their clients. However, what happens when your machine learning platform uses a different protocol? For instance, some platforms still use SOAP.

Using the API gateway, you can translate incoming calls, such as REST calls, into protocols that are compatible with your machine learning platform. Because the translation happens at the gateway, developers do not have to modify the platform's internal architecture and risk compromising it.
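To make the translation step concrete, here is a minimal sketch that wraps a flat JSON request body in a SOAP 1.1 envelope and converts a SOAP body back into JSON. The operation name and field names used with it are hypothetical, and the sketch assumes JSON keys that are valid XML element names. A real translation layer would also handle nested data, types, and SOAP faults.

```python
import json
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
ET.register_namespace("soap", SOAP_NS)


def json_to_soap(json_body: str, operation: str) -> str:
    """Wrap the fields of a flat JSON object in a SOAP 1.1 envelope."""
    payload = json.loads(json_body)
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    call = ET.SubElement(body, operation)
    for key, value in payload.items():
        # Assumes each key is a valid XML element name.
        ET.SubElement(call, key).text = str(value)
    return ET.tostring(envelope, encoding="unicode")


def soap_to_json(soap_xml: str) -> str:
    """Turn the first element inside the SOAP body back into a JSON object."""
    envelope = ET.fromstring(soap_xml)
    body = envelope.find(f"{{{SOAP_NS}}}Body")
    result = body[0]
    # XML text carries no type information, so every value comes back as a string.
    return json.dumps({child.tag: child.text for child in result})
```

For example, `json_to_soap('{"customer_id": "c-17"}', "Predict")` would produce an envelope whose body contains a `Predict` element with one `customer_id` child.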

4. Solving Cross-Cutting Challenges

Even though developers adopt microservices for their independence, they end up implementing the same functionality and making the same engineering decisions again for every microservice.

It is therefore better to offload some of the machine learning platform's shared functionality and consolidate it in the gateway.

Some of these functionalities include:

- Load balancing

A machine learning platform needs to handle all requests efficiently. For this to happen, the API gateway balances the load between service nodes. This is critical for keeping the platform available during changes and version upgrades.

- Rate limiting

A machine learning platform might be deliberately or accidentally overused. You need an API gateway to enforce usage policies so that no client exceeds the allowed usage rate.

- Log aggregation and tracing

You need to keep audit logs for your machine learning platform. These logs are important when it comes to analytics, reporting, and debugging.

- Authorization and authentication

You need to protect your machine learning platform from cybercriminals and provide it with basic security. An API gateway acts as a line of defense providing critical functions such as authorization, authentication, validation, identity verification, encryption and decryption, token translation, and antivirus scanning, among others.
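The cross-cutting functions listed above can be combined in one place, which is the point of handling them at the gateway. The sketch below is a simplified illustration under several assumptions: the API key, rate limit, window size, and node addresses are hypothetical, the authentication step is a plain key lookup, and the gateway only picks a node instead of actually forwarding the request.

```python
import itertools
import logging
import time
import uuid

logger = logging.getLogger("gateway")

# All keys, limits and node addresses below are hypothetical placeholders.
API_KEYS = {"key-abc": "team-a"}   # API key -> client id
RATE_LIMIT = 3                     # requests allowed per window, per client
WINDOW_SECONDS = 60
NODES = ["inference-1:8001", "inference-2:8001"]


class Gateway:
    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._nodes = itertools.cycle(NODES)  # round-robin load balancing
        self._windows = {}                    # client id -> (window start, count)

    def _allow(self, client: str) -> bool:
        """Fixed-window rate limiting: at most RATE_LIMIT requests per window."""
        now = self._clock()
        start, count = self._windows.get(client, (now, 0))
        if now - start >= WINDOW_SECONDS:
            start, count = now, 0             # a new window begins
        if count >= RATE_LIMIT:
            self._windows[client] = (start, count)
            return False
        self._windows[client] = (start, count + 1)
        return True

    def handle(self, api_key: str, path: str) -> tuple:
        """Return (HTTP-style status, chosen node or None) for one request."""
        request_id = uuid.uuid4().hex         # lets log lines be correlated later
        client = API_KEYS.get(api_key)
        if client is None:
            status, node = 401, None          # authentication failed
        elif not self._allow(client):
            status, node = 429, None          # usage policy exceeded
        else:
            status, node = 200, next(self._nodes)
        logger.info("id=%s client=%s path=%s status=%s node=%s",
                    request_id, client, path, status, node)
        return status, node
```

With these settings, a client using `key-abc` would have its first three requests in a window spread across the two nodes in turn, and a fourth request in the same window would be rejected with status 429. Every request, accepted or not, produces one log line.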

Closing Off

In conclusion, it is important to understand that an API gateway and API management are two different things with different applications.

An API gateway is responsible for technical functions, working as middleware between the services and endpoints of an API, while API management has a broader scope.

We hope this article has explained the role of an API gateway in a machine learning platform.

Source by justtotaltech.com