The ICO said the data use involved in emotion analysis is ‘far more risky’ than traditional biometric tech and presents issues around bias, inaccuracy and discrimination.

A UK regulator has warned organisations to consider the public risks of emotion analysis technologies before implementing them.

The Information Commissioner’s Office (ICO) said it will investigate firms that do not act responsibly or that pose a risk to vulnerable people by improperly implementing these biometric systems.

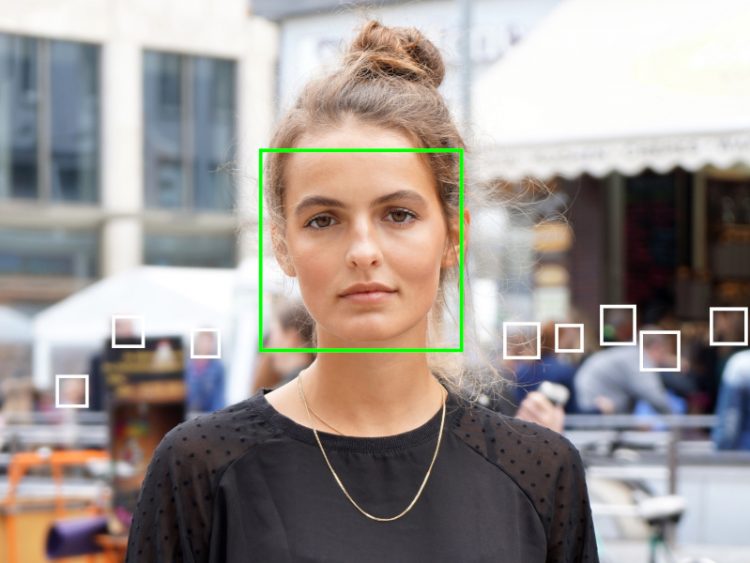

According to the ICO, emotion analysis systems process data such as gaze tracking, sentiment analysis, facial movements, gait analysis, heartbeats, facial expressions and skin moisture. These systems rely on storing and processing a range of personal data, such as subconscious behavioural or emotional responses.

The watchdog said this type of data use is “far more risky” than traditional biometric technologies used to identify people, with a greater risk of bias, inaccuracy and discrimination.

ICO deputy commissioner Stephen Bonner said developments in the biometrics and emotion AI market are “immature” and may never work.

“While there are opportunities present, the risks are currently greater,” Bonner said. “At the ICO, we are concerned that incorrect analysis of data could result in assumptions and judgements about a person that are inaccurate and lead to discrimination.”

Bonner said the only “sustainable biometric deployments” are those that are fully functional and backed by science. Speaking to The Guardian, he said emotion analysis technology does not seem to be backed by science.

Bonner added that the ICO will continue to “scrutinise the market” and identify stakeholders that seek to create or deploy these technologies.

“We are yet to see any emotion AI technology develop in a way that satisfies data protection requirements, and have more general questions about proportionality, fairness and transparency in this area,” Bonner said.

Along with the warning on emotion analysis tech, the ICO said it is developing guidance on the wider use of biometric systems such as facial, fingerprint and voice recognition.

The watchdog plans to release this guidance in spring 2023 to help businesses using this technology, while highlighting the importance of data security.

“Biometric data is unique to an individual and is difficult or impossible to change should it ever be lost, stolen or inappropriately used,” the ICO said in a statement.

In 2019, a biometric system used by banks, UK police and defence firms was breached, resulting in the personal information of more than 1m people being discovered on a publicly accessible database.

In May, ICCL technology fellow Dr Kris Shrishak spoke to SiliconRepublic.com about the challenges of regulation when it comes to facial recognition technology.

Source by www.siliconrepublic.com